Zip Files:

History, Explanation and Implementation

(26 February 2020)

I have been curious about data compression and the Zip file format in particular for a long time. At some point I decided to address that by learning how it works and writing my own Zip program. The implementation turned into an exciting programming exercise; there is great pleasure to be had from creating a well oiled machine that takes data apart, jumbles its bits into a more efficient representation, and puts it all back together again. Hopefully it is interesting to read about too.

This article explains how the Zip file format and its compression scheme work in great detail: LZ77 compression, Huffman coding, Deflate and all. It tells some of the history, and provides a reasonably efficient example implementation written from scratch in C. The source code is available in hwzip-2.4.zip.

I have done my best to provide a bug-free implementation. If you find any issues, please let me know.

I am very grateful to Ange Albertini, Gynvael Coldwind, Fabian Giesen, Jonas Skeppstedt (web), Primiano Tucci, and Nico Weber who provided valuable feedback on draft versions of this material.

Update 2024-09-01: Tweak how compute_huffman_lengths limits the Huffman code lengths. The change is part of hwzip-2.4.zip.

Update 2023-06-10: Added a paragraph about how Info-Zip's zip and unzip programs, as well as gzip, could support Deflate before PKZip 2.04 had been released. Updated ctime2dos() to avoid converting dates outside the supported range. That change is part of hwzip-2.2.zip.

Update 2022-04-30: Hadrien Dorio ported hwzip to the Zig programming language. In the process he found an overflow bug in the compression ratio computation in zip_callback() and some incorrect uses of the CHECK macro in the unit tests. Those bugs have been fixed in hwzip-2.1.zip.

Update 2021-03-12: I wrote a follow-up article about the legacy Zip compression methods: Shrink, Reduce, and Implode. The code is in hwzip-2.0.zip.

Update 2020-10-11: In hwzip-1.4.zip, the distance2dist table was split into two smaller tables, reducing the binary size by 32 KB. It also ensures that Deflate blocks always emit at least two non-zero dist codeword lengths, for compatibility with old Zlib versions.

Update 2020-06-14: In hwzip-1.3.zip, the minimum Lempel–Ziv back reference length was increased from three to four bytes, and the hash was changed from a three-byte rolling hash to a four-byte multiplicative hash. This made Deflate compression more than twice as fast as the previous version.

Table of Contents

- History

- Lempel–Ziv Compression (LZ77)

- Huffman Coding

- Deflate

- The Zip File Format

- HWZip

- Conclusion

- Exercises

- Further Reading

- Linked Files

History

PKZip

Back in the eighties and early nineties, before the Internet became widely available, home computer enthusiasts used dial-up modems to connect to Bulletin Board Systems (BBSes) over the telephone network. A BBS is an interactive computer system that typically allows users to send messages, play games, and share files. All that was needed to go online was a computer, a modem, and the phone number of a good BBS—something that could be found in lists published by computer magazines, and on other BBSes.

One important tool for making file sharing easier was the archiver. An archiver stores one or more files into a single file, an archive, allowing the files to be stored or transferred as a single unit, and ideally also compresses them to save storage space and transfer time. One such archiver that was popular with the BBS scene was Arc, written by Thom Henderson of System Enhancement Associates (SEA), a small company he had founded with his brother-in-law.

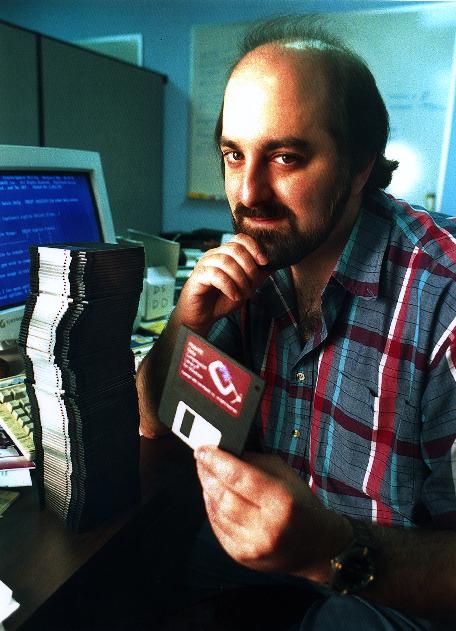

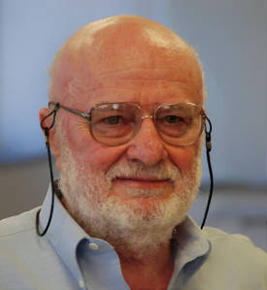

In the late eighties, a programmer named Phil Katz released his own Arc program, PKArc. It was compatible with SEA's Arc, but faster due to routines written in assembly, and it had a new compression method added by Katz. The program became popular, and Katz quit his job and founded PKWare to focus on developing it. According to legend, much of the work took place at his mother Hildegard's kitchen table in Glendale, Wisconsin.

Phil Katz (1962–2000). Photo from an article in the Milwaukee Sentinel, 19 September 1994.

SEA, however, were not thrilled by Katz's initiative. They sued for trademark violation and copyright infringement. The dispute and the surrounding debate in the BBS and PC world became known as the Arc Wars. In the end, the case was settled to SEA's advantage.

Moving on from Arc, in 1989 Katz created a new archive format which he named Zip and dedicated to the public domain:

The file format of the files created by these programs, which file format is original with the first release of this software, is hereby dedicated to the public domain. Further, the filename extension of ".ZIP", first used in connection with data compression software on the first release of this software, is also hereby dedicated to the public domain, with the fervent and sincere hope that it will not be attempted to be appropriated by anyone else for their exclusive use, but rather that it will be used to refer to data compression and librarying software in general, of a class or type which creates files having a format generally compatible with this software.

Katz's program for creating such files was called PKZip, and it was soon adopted by the BBS and PC world.

One aspect that most likely contributed to the Zip format's success was that PKZip came with a document, the Application Note, which explained exactly how the format works. This allowed others to study the format and create programs that create, extract, or otherwise interact with Zip files in a compatible way.

Zip is a lossless compression format: after decompression, the data is identical to what it was before compression. It works by finding redundancies in the source data and representing it more efficiently. This is different from lossy compression, used in image and sound formats such as JPEG and MP3, which work by removing features from the data which are less perceivable to the human eye or ear, etc.

PKZip was distributed as Shareware: it could be used and copied freely, but users were encouraged to "register" the program. For $47, one would receive a printed manual, premium support, and an enhanced version of the software.

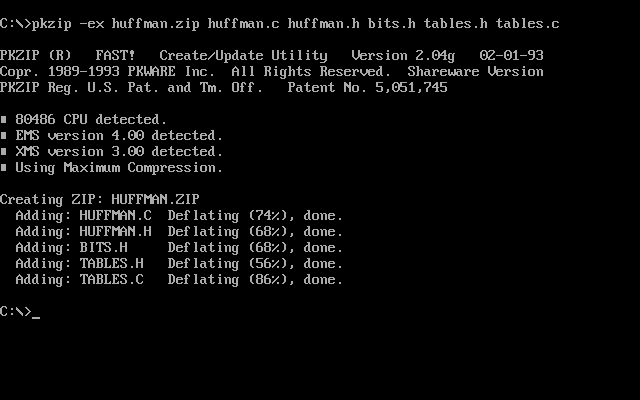

The seminal version of PKZip was 2.04c, from 28 December 1992, soon followed by bug fix release 2.04g. This introduced Deflate as the default compression method, and defined how Zip file compression would work going forward. (Boardwatch article about the release.)

The Zip format has since been adopted by many other file formats. For example, Java Archives (.jar files) and Android Application Packages (.apk files), as well as Microsoft Office .docx files, are all using the Zip format. Other file formats and protocols re-use the compression algorithm used in Zip files, Deflate. For example, this web page was most likely transferred to your web browser as a gzip file, a format which uses Deflate compression.

Phil Katz passed away in 2000. PKWare still exist and maintain the Zip format, though they focus mainly on data security software.

Info-Zip and Zlib

Soon after the release of PKZip in 1989, other programs to extract Zip files started showing up, in particular a program called unzip that could be used to extract Zip files on Unix systems. A mailing list called Info-Zip was set up in March 1990.

The Info-Zip group released the free and open-source unzip and zip programs, used to extract and create zip files. The code was ported to many systems and they are still the standard Zip programs used on Unix systems. This further helped increase the popularity of Zip files.

Although the Deflate compression method was first officially released with PKZip 2.04 in December 1992, it had been available in PKZip "alpha release" pkz193a.exe since October 1991. Based on that, Info-Zip added support for the new compression method to their programs, and officially released zip and unzip with Deflate support already in August 1992. This also explains how gzip could use Deflate compression, although its first release (October 1992 according to the changelog) predates PKZip 2.04.

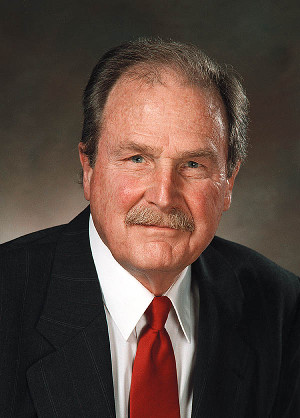

At some point, the Info-Zip code that performed the Deflate compression and decompression was extracted into a separate software library called Zlib, written by Jean-loup Gailly (compression) and Mark Adler (decompression).

Jean-loup Gailly (left) and Mark Adler (right) receiving the USENIX STUG Award in 2009.

One reason for creating the library was that this made it convenient to use Deflate compression in other applications and file formats, such as the new gzip file compression format, and the PNG image compression format. These new file formats had been proposed in order to replace the Compress and GIF file formats, which used the patent-encumbered LZW algorithm.

As part of developing those formats, a specification of Deflate was written by L Peter Deutsch and published as Internet RFC 1951 in May 1996. This provides an easier to follow description than the original PKZip Application Note.

Today, the use of Zlib is truly ubiquitous. It was probably responsible for both the compression of this page on the web server, and decompression in your web browser. Compression and decompression of most Zip and Zip-like files is now done with Zlib.

WinZip

Many people who did not use PKZip do remember using WinZip. As PC users moved from DOS to Windows, they also moved from PKZip to WinZip.

It started as a project by programmer Nico Mak, who was working on software for the OS/2 operating system at a company called Mansfield Software Group in Storrs-Mansfield, Connecticut. He was using Presentation Manager, the graphical user interface in OS/2, and was frustrated by how he had to switch from its File Manager to a DOS command prompt whenever he wanted to create or extract Zip files.

Mak wrote a simple graphical program to manage Zip files directly in Presentation Manager, named it PMZip, and released it as shareware in 1990.

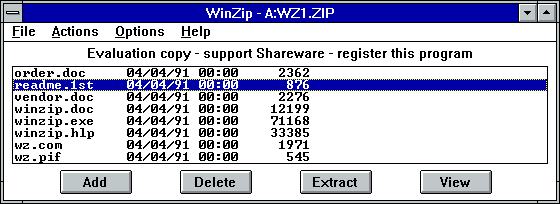

OS/2 never really took off; instead the PC world was moving to Microsoft Windows. In 1991, Mak decided to learn how to write Windows programs, and his first project was to port his Zip program to this new operating system. WinZip 1.00 was released in April 1991 as shareware with a 21-day evaluation period and $29 registration price. It looked like this:

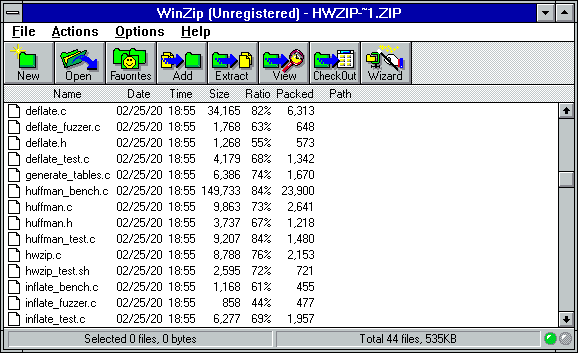

The first versions of WinZip used PKZip behind the scenes, but starting with version 5.0 in 1993 it uses the code from Info-Zip to manage Zip files directly. From its humble beginnings, the user interface evolved to different versions of the one below.

Screenshot of WinZip 6.3 running under Windows 3.11 for Workgroups.

WinZip was one of the most popular shareware programs during the nineties, but it eventually became less relevant as operating systems gained built-in support for Zip files. Windows manages Zip files as "compressed folders" since Windows ME (or Windows 98 with Plus! 98). The Windows integration was written by Dave Plummer and distributed as a shareware product before Microsoft acquired it. See Dave's video for the story.

Windows compressed folders use a library called DynaZip to manage Zip files under the hood. The library came about as Neil Rosenberg and Glen Horton at Inner Media needed a way to create Zip files in their screen capture/image management tool Collage. Based on an early version of Info-Zip, they built a Windows library which became very popular with developers.

Mak's company was originally called Nico Mak Computing. In 2000 it was renamed to WinZip Computing, and Mak seems to have left around this time. In 2005 the company was sold to Vector Capital, and it eventually ended up owned by Corel who still release WinZip as a product.

Lempel–Ziv Compression (LZ77)

There are two main ingredients in Zip compression: Lempel–Ziv compression and Huffman coding. This section describes the former.

One way of compressing text is to maintain a list of common words or phrases, and replace occurrences of those words in the text with references to the dictionary. For example, a long word such as "compression" in the original text might be represented more efficiently as #1234, where 1234 refers to the position in the word list. This is known as dictionary-based compression.

The dictionary method poses several problems for a general-purpose compression scheme. First, what should go in the dictionary? The original data might not be in English, and it might not even be human-readable text. And if the dictionary is not agreed upon between the compressing and decompressing parties beforehand, it needs to be stored and transmitted together with the compressed data, reducing the benefit of the compression.

One elegant solution to these problems is to use the original data itself as the dictionary. In their 1977 paper "A Universal Algorithm for Sequential Data Compression", Jacob Ziv and Abraham Lempel (both at Technion), propose a compression scheme where the original data is parsed into a sequence of triplets

(pointer, length, next)

where pointer and length form a back reference to a substring to be copied from a previous position in the original text, and next is the next character to output.

Abraham Lempel (1936–2023) and Jacob Ziv (1931–2023). Photos from Wikipedia (Staelin, CC BY 3.0) and Wikipedia.

For example, consider the snippet below.

It was the best of times,

it was the worst of times,

In the second line, the "t was the w" substring can be represented as (26, 10, w), because it can be recreated by copying 10 characters from the position 26 steps back, followed by a "w". Characters which have not occurred before use zero-length back references. For example, the initial "I" would be represented as (0, 0, I).

This form of compression is called Lempel–Ziv or LZ77 compression. However, real-world implementations typically do not use the next part of the triplets. Instead, they output single characters separately and use (distance, length) pairs for the back references. (This variant is called LZSS compression.) How the literals and back reference are encoded is a separate problem, and we will see how it is done in Deflate later.

As an example, the following text

It was the best of times,

it was the worst of times,

it was the age of wisdom,

it was the age of foolishness,

it was the epoch of belief,

it was the epoch of incredulity,

it was the season of Light,

it was the season of Darkness,

it was the spring of hope,

it was the winter of despair,

we had everything before us,

we had nothing before us,

we were all going direct to Heaven,

we were all going direct the other way

can be compressed into

It was the best of times, i(26,10)

wor(27,24)age(25,4)wisdom(26,20)

foolishnes(57,14)epoch(33,4)

belief(28,22)incredulity(33,13)

season(34,4)Light(28,23)Dark(120,17)

spring(31,4)hope(231,14)inter(27,4)

despair, we had everyth(57,4)before us(29,9)

no(26,20)were all go(29,4)

direct to Heaven(36,28)he other way

One exciting aspect of back references is that they can overlap with themselves, which happens when the length is greater than the distance. This is best illustrated by an example:

Fa-la-la-la-la

can be compressed into

Fa-la(3,9)

This may seem strange, but it works: once the first three "-la" bytes have been copied, the copying continues using the recently output bytes.

This is effectively a form of run-length encoding, where a piece of data is copied repeatedly up to a certain length.

See Colin Morris's Are Pop Lyrics Getting More Repetitive? article for an interactive example of Lempel–Ziv compression applied to song lyrics.

Expressed in C, a back reference can be copied out as shown below. Note that because of the possible self-overlap, we cannot use memcpy or memmove.

/* Output the (dist,len) back reference at dst_pos in dst. */

static inline void lz77_output_backref(uint8_t *dst, size_t dst_pos,

size_t dist, size_t len)

{

size_t i;

assert(dist <= dst_pos && "cannot reference before beginning of dst");

for (i = 0; i < len; i++) {

dst[dst_pos] = dst[dst_pos - dist];

dst_pos++;

}

}

Literals are trivial to output but we provide a utility function for completeness:

/* Output a literal byte at dst_pos in dst. */

static inline void lz77_output_lit(uint8_t *dst, size_t dst_pos, uint8_t lit)

{

dst[dst_pos] = lit;

}

Note that the caller of these functions is responsible for making sure there is enough room in dst for the output, and that the back reference does not try to go before the start of the buffer.

Of course the hard part is not to output back references during decompression, but rather how to find them in the first place when compressing the original data. There are different ways of doing that, but we will follow Zlib's hash table-based approach, which is also what RFC 1951 suggests.

The idea is to maintain a hash table with the positions of four-character prefixes that have occurred previously in the string (shorter back references are not considered profitable). For Deflate, only back references to the most recent 32,768 characters, the window, are allowed. This enables streaming compression: the input can be processed a little at a time, as long as the window with the most recent bytes are kept in memory. However, our implementation will assume that the full input is available and process it in one go, allowing us to focus on the compression instead of the bookkeeping required for streaming.

We will use two arrays: head maps the hash value of a four-letter prefix to a position in the input data, and prev maps a position to the previous position with the same hash value. In effect, head[h] is the head of a linked list of positions of prefixes with hash h, and prev[x] gets the element previous to x in the list.

#define LZ_WND_SIZE 32768

#define HASH_SIZE 15

#define NO_POS SIZE_MAX

#define MIN_LEN 4

/* Perform LZ77 compression on the src_len bytes in src, with back references

limited to a certain maximum distance and length, and with or without

self-overlap. Returns false as soon as either of the callback functions

returns false, otherwise returns true when all bytes have been processed. */

bool lz77_compress(const uint8_t *src, size_t src_len, size_t max_dist,

size_t max_len, bool allow_overlap,

bool (*lit_callback)(uint8_t lit, void *aux),

bool (*backref_callback)(size_t dist, size_t len, void *aux),

void *aux)

{

size_t head[1U << HASH_SIZE];

size_t prev[LZ_WND_SIZE];

uint32_t h;

size_t i, j, dist;

size_t match_len, match_pos;

size_t prev_match_len, prev_match_pos;

/* Initialize the hash table. */

for (i = 0; i < sizeof(head) / sizeof(head[0]); i++) {

head[i] = NO_POS;

}

To insert a new string position in the hash table, prev is updated to point to the previous head, and head is then updated:

static void insert_hash(uint32_t hash, size_t pos, size_t *head, size_t *prev)

{

assert(pos != NO_POS && "Invalid pos!");

prev[pos % LZ_WND_SIZE] = head[hash];

head[hash] = pos;

}

Note the modulo operation when indexing into prev: we only care about positions that fall inside the current window.

A simple function is used to compute the hash values: (read32le reads a 32-bit value in little-endian order, and is implemented in bits.h)

/* Compute a hash value based on four bytes pointed to by ptr. */

static uint32_t hash4(const uint8_t *ptr)

{

static const uint32_t HASH_MUL = 2654435761U;

/* Knuth's multiplicative hash. */

return (read32le(ptr) * HASH_MUL) >> (32 - HASH_SIZE);

}

The hash map can then be used to search efficiently for a previous match with a substring, as shown below. Searching for matches is the most computationally expensive part of the compression, so we limit how far back the list of potential matches we search.

Changing parameters such as how far back the list of prefixes to search (and whether to do lazy matching, described further down) is a way of trading less compression for more speed. The settings in our code are chosen to match those of Zlib's maximum compression level.

/* Find the longest most recent string which matches the string starting

* at src[pos]. The match must be strictly longer than prev_match_len and

* shorter or equal to max_match_len. Returns the length of the match if found

* and stores the match position in *match_pos, otherwise returns zero. */

static size_t find_match(const uint8_t *src, size_t pos, uint32_t hash,

size_t max_dist, size_t prev_match_len,

size_t max_match_len, bool allow_overlap,

const size_t *head, const size_t *prev,

size_t *match_pos)

{

size_t max_match_steps = 4096;

size_t i, l;

bool found;

size_t max_cmp;

if (prev_match_len == 0) {

/* We want backrefs of length MIN_LEN or longer. */

prev_match_len = MIN_LEN - 1;

}

if (prev_match_len >= max_match_len) {

/* A longer match would be too long. */

return 0;

}

if (prev_match_len >= 32) {

/* Do not try too hard if there is already a good match. */

max_match_steps /= 4;

}

found = false;

i = head[hash];

max_cmp = max_match_len;

/* Walk the linked list of prefix positions. */

for (i = head[hash]; i != NO_POS; i = prev[i % LZ_WND_SIZE]) {

if (max_match_steps == 0) {

break;

}

max_match_steps--;

assert(i < pos && "Matches should precede pos.");

if (pos - i > max_dist) {

/* The match is too far back. */

break;

}

if (!allow_overlap) {

max_cmp = min(max_match_len, pos - i);

if (max_cmp <= prev_match_len) {

continue;

}

}

l = cmp(src, i, pos, prev_match_len, max_cmp);

if (l != 0) {

assert(l > prev_match_len);

assert(l <= max_match_len);

found = true;

*match_pos = i;

prev_match_len = l;

if (l == max_match_len) {

/* A longer match is not possible. */

return l;

}

}

}

if (!found) {

return 0;

}

return prev_match_len;

}

/* Compare the substrings starting at src[i] and src[j], and return the length

* of the common prefix if it is strictly longer than prev_match_len

* and shorter or equal to max_match_len, otherwise return zero. */

static size_t cmp(const uint8_t *src, size_t i, size_t j,

size_t prev_match_len, size_t max_match_len)

{

size_t l;

assert(prev_match_len < max_match_len);

/* Check whether the first prev_match_len + 1 characters match. Do this

* backwards for a higher chance of finding a mismatch quickly. */

for (l = 0; l < prev_match_len + 1; l++) {

if (src[i + prev_match_len - l] !=

src[j + prev_match_len - l]) {

return 0;

}

}

assert(l == prev_match_len + 1);

/* Now check how long the full match is. */

for (; l < max_match_len; l++) {

if (src[i + l] != src[j + l]) {

break;

}

}

assert(l > prev_match_len);

assert(l <= max_match_len);

assert(memcmp(&src[i], &src[j], l) == 0);

return l;

}

With the code for finding previous matches in place, we can finish the lz77_compress function:

prev_match_len = 0;

prev_match_pos = NO_POS;

for (i = 0; i + MIN_LEN - 1 < src_len; i++) {

/* Search for a match using the hash table. */

h = hash4(&src[i]);

match_len = find_match(src, i, h, max_dist, prev_match_len,

min(max_len, src_len - i), allow_overlap,

head, prev, &match_pos);

/* Insert the current hash for future searches. */

insert_hash(h, i, head, prev);

/* If the previous match is at least as good as the current. */

if (prev_match_len != 0 && prev_match_len >= match_len) {

/* Output the previous match. */

dist = (i - 1) - prev_match_pos;

if (!backref_callback(dist, prev_match_len, aux)) {

return false;

}

/* Move past the match. */

for (j = i + 1; j < min((i - 1) + prev_match_len,

src_len - (MIN_LEN - 1)); j++) {

h = hash4(&src[j]);

insert_hash(h, j, head, prev);

}

i = (i - 1) + prev_match_len - 1;

prev_match_len = 0;

continue;

}

/* If no match (and no previous match), output literal. */

if (match_len == 0) {

assert(prev_match_len == 0);

if (!lit_callback(src[i], aux)) {

return false;

}

continue;

}

/* Otherwise the current match is better than the previous. */

if (prev_match_len != 0) {

/* Output a literal instead of the previous match. */

if (!lit_callback(src[i - 1], aux)) {

return false;

}

}

/* Defer this match and see if the next is even better. */

prev_match_len = match_len;

prev_match_pos = match_pos;

}

/* Output any previous match. */

if (prev_match_len != 0) {

dist = (i - 1) - prev_match_pos;

if (!backref_callback(dist, prev_match_len, aux)) {

return false;

}

i = (i - 1) + prev_match_len;

}

/* Output any remaining literals. */

for (; i < src_len; i++) {

if (!lit_callback(src[i], aux)) {

return false;

}

}

return true;

}

The code looks for the longest possible back reference that could be emitted at the current position. However, before outputting that back reference, it considers whether an even longer match could be found at the next position. Zlib calls this lazy match evaluation. This is still a greedy algorithm: it chooses the longest match, even though a shorter match now might allow for a longer match later and better compression overall.

Lempel–Ziv compression can be both fast and slow. Zopfli spends a lot of time trying to find optimal back references to squeeze out a few extra percent of compression. This is useful for data that is compressed once and used many times, such as static content on a web server. On the other end of the spectrum are compressors such as Snappy and LZ4, which match only against the most recent 4-byte prefix and run very fast. Such compression can be useful in database or RPC systems, where a short moment spent compressing is paid off by time savings when sending data over the network or to and from disk.

The Lempel–Ziv idea of using the source data itself as the dictionary is very elegant, but using a static dictionary can still be beneficial. Brotli is an LZ77-based compression algorithm, but it also uses a large static dictionary of strings that occur frequently on the web.

The LZ77 code is available in lz77.h and lz77.c.

Huffman Coding

The second ingredient in Zip compression is Huffman coding.

The term code in this context refers to a system for representing some data in another form. For our purposes, we are interested in codes that can be used to represent the literals and back references produced by the Lempel–Ziv compression above efficiently.

Computers traditionally represent English text using the American Standard Code for Information Interchange (ASCII). That code assigns a number to each character, and computers typically store each such number in an 8-bit byte. For example, the text you are reading now is originally stored like that. Here is the ASCII code for the upper-case English alphabet:

| A | 01000001 | N | 01001110 |

|---|---|---|---|

| B | 01000010 | O | 01001111 |

| C | 01000011 | P | 01010000 |

| D | 01000100 | Q | 01010001 |

| E | 01000101 | R | 01010010 |

| F | 01000110 | S | 01010011 |

| G | 01000111 | T | 01010100 |

| H | 01001000 | U | 01010101 |

| I | 01001001 | V | 01010110 |

| J | 01001010 | W | 01010111 |

| K | 01001011 | X | 01011000 |

| L | 01001100 | Y | 01011001 |

| M | 01001101 | Z | 01011010 |

Using one byte per character is a convenient way of storing text. It makes it easy to access or change parts of the text, and it is obvious how many bytes are required to store N characters or how many characters are stored in N bytes. However, it is not the most space efficient way. For example, E and Z are the most and least used characters in English text, respectively. Therefore it would be more space efficient to use a shorter bit representation for E and a longer for Z, instead of using the same number of bits for each character.

A code that specifies different-length codewords for different source symbols is called a variable-length code. The most famous example is Morse code, which encodes symbols using dots and dashes, originally transmitted as short and long electric pulses over a telegraph wire:

| A | • − | N | − • |

|---|---|---|---|

| B | − • • • | O | − − − |

| C | − • − • | P | • − − • |

| D | − • • | Q | − − • − |

| E | • | R | • − • |

| F | • • − • | S | • • • |

| G | − − • | T | − |

| H | • • • • | U | • • − |

| I | • • | V | • • • − |

| J | • − − − | W | • − − |

| K | − • − | X | − • • − |

| L | • − • • | Y | − • − − |

| M | − − | Z | − − • • |

One problem with Morse code is that one codeword can be the prefix of another. For example, • • − • is not uniquely decodable: it could mean either F or ER. This problem is solved by making pauses (the length of three dots) between letters during transmission. However, a better solution would be if no codeword was the prefix of another. Such a code is called a prefix-free code, or sometimes just prefix code. The fixed-length ASCII code above is trivially prefix-free since the codewords are all the same length, but variable-length codes can also be prefix-free. Telephone numbers are mostly prefix-free. Before the 112 emergency telephone number was adopted in Sweden, all existing phone numbers starting with 112 had to be changed, and nobody in the US has a phone number starting with 911.

To minimize the size of an encoded message, we would like a prefix-free code where frequently occurring symbols have shorter codewords than infrequent ones. The optimum code would be one which generates the shortest possible result, that is, a code where the sum of the codeword lengths multiplied by their frequency of occurrence is as small as possible. This is called a minimum-redundancy prefix-free code, or these days a Huffman code after the man who invented an efficient algorithm for constructing them.

Huffman's Algorithm

While studying for his doctorate in electrical engineering at MIT, David A. Huffman took a course in information theory taught by Robert Fano. According to legend, Fano gave his students a choice between taking a final exam or writing a term paper. Huffman chose the latter, and was assigned the topic of finding minimum-redundancy prefix-free codes. Huffman was allegedly not aware that this was an open problem which Fano himself had worked on (the best known method at the time was Shannon-Fano coding). Huffman's paper was published as A Method for the Construction of Minimum-Redundancy Codes in 1952, and the algorithm has been widely used ever since.

David A. Huffman (1925–1999). Photo from UC Santa Cruz press release.

Huffman's algorithm creates a minimum-redundancy prefix-free code for a set of symbols and their frequencies of use. The algorithm works by repeatedly selecting the two symbols, say X and Y, with the lowest frequencies from the set, and replacing them with a single composite symbol which represents "X or Y". The frequency of the composite symbol is the sum of the frequencies of the two original symbols. The codewords for X and Y can be whatever codeword gets assigned to the composite "X or Y" symbol, followed by a 0 or 1 to differentiate between the two original symbols. When the set has been reduced to a single symbol, the algorithm is done. (See this video for a good explanation.)

Below is an example of running the algorithm on a small set of symbols:

| Symbol | Frequency |

|---|---|

| A | 6 |

| B | 4 |

| C | 2 |

| D | 3 |

Initially, the set of symbols to be processed (coloured blue) is our original symbols:

The two lowest-frequency symbols, C and D, are removed from the set, and replaced by a composite symbol whose frequency is the sum of C and D's frequencies.

The lowest-frequency symbols are now B and the composite symbol with frequency five. These are removed from the set, and a new composite symbol with frequency nine is inserted instead:

Finally, A and the composite node with frequency 9 have the lowest frequencies, and so a composite node with frequency 15 is inserted.

Since there is only one node left in the set, the algorithm is finished.

The algorithm leaves us with a structure called a Huffman tree. Note how it has our input symbols as leaves, and symbols with higher frequency are closer to the top. We can derive codewords for our symbols from this tree by starting at the root, walking towards a symbol, and adding a 0 or 1 to the codeword when going left or right, respectively. If we do that, we end up with:

| Symbol | codeword |

|---|---|

| A | 0 |

| B | 10 |

| C | 110 |

| D | 111 |

Note how none of the codewords are a prefix of another, and how the symbols with higher frequency have shorter codewords.

The tree can also be used for decoding: start at the root and go left or right for 0 or 1 until a symbol is reached. For example, the string 010100 decodes to ABBA.

Note that the length of each codeword equals the depth of the corresponding node in the tree. As we will see in the next section, we do not need the actual tree to assign codewords; knowing the lengths of the codewords is enough. Therefore, the output of our implementation of Huffman's algorithm will be those codeword lengths.

To store the set of symbols and efficiently find the one with lowest frequency, we use a binary heap data structure, specifically a min-heap since we want the minimum value on top.

/* Swap the 32-bit values pointed to by a and b. */

static void swap32(uint32_t *a, uint32_t *b)

{

uint32_t tmp;

tmp = *a;

*a = *b;

*b = tmp;

}

/* Move element i in the n-element heap down to restore the minheap property. */

static void minheap_down(uint32_t *heap, size_t n, size_t i)

{

size_t left, right, min;

assert(i >= 1 && i <= n && "i must be inside the heap");

/* While the i-th element has at least one child. */

while (i * 2 <= n) {

left = i * 2;

right = i * 2 + 1;

/* Find the child with lowest value. */

min = left;

if (right <= n && heap[right] < heap[left]) {

min = right;

}

/* Move i down if it is larger. */

if (heap[min] < heap[i]) {

swap32(&heap[min], &heap[i]);

i = min;

} else {

break;

}

}

}

/* Establish minheap property for heap[1..n]. */

static void minheap_heapify(uint32_t *heap, size_t n)

{

size_t i;

/* Floyd's algorithm. */

for (i = n / 2; i >= 1; i--) {

minheap_down(heap, n, i);

}

}

To keep track of the frequency of up to n symbols we will use a heap of n elements. In addition, every time we create a composite symbol, we want to "link" the two original symbols to the new one. So each symbol will also have a "link element".

We will use a single array of n * 2 + 1 elements to store the n-element heap and the n link elements. When two symbols in the heap are replaced by one, we will use the leftover array element to store the link of the new symbol. This is based on the implementation in Witten, Moffat and Bell's Managing Gigabytes.

In each heap node, we will use the upper 16 bits to store the symbol's frequency, and the lower 16 bits to store the index of the symbol's link element. By using the upper bits, the difference in frequency will determine the outcome of 32-bit comparisons between two heap elements.

Because of this representation, we must be sure that a symbol's frequency always fits within 16 bits. When the algorithm is finished, the final composite symbol will have the frequency of all original symbols combined, so therefore this sum must fit within 16 bits. Our Deflate implementation will make sure of this by processing at most 65,535 symbols at a time.

Symbols with zero frequency will receive a codeword length of zero and not take part in the construction of the code.

If a codeword exceeds the maximum length, we "flatten" the distribution of symbol frequencies by right-shifting them and try again (yes, with a goto). There are more sophisticated ways of doing length-limited Huffman coding, but this is simple and effective.

#define MAX_HUFFMAN_SYMBOLS 288 /* Deflate uses max 288 symbols. */

/* Construct a Huffman code for n symbols with the frequencies in freq, and

* codeword length limited to max_len. The sum of the frequencies must be <=

* UINT16_MAX. max_len must be large enough that a code is always possible,

* i.e. 2 ** max_len >= n. Symbols with zero frequency are not part of the code

* and get length zero. Outputs the codeword lengths in lengths[0..n-1]. */

static void compute_huffman_lengths(const uint16_t *freqs, size_t n,

uint8_t max_len, uint8_t *lengths)

{

uint32_t nodes[MAX_HUFFMAN_SYMBOLS * 2 + 1], p, q;

uint16_t freq;

size_t i, h, l;

uint16_t freq_shift = 0;

#ifndef NDEBUG

uint32_t freq_sum = 0;

for (i = 0; i < n; i++) {

freq_sum += freqs[i];

}

assert(freq_sum <= UINT16_MAX && "Frequency sum too large!");

#endif

assert(n <= MAX_HUFFMAN_SYMBOLS);

assert((1U << max_len) >= n && "max_len must be large enough");

try_again:

/* Initialize the heap. h is the heap size. */

h = 0;

for (i = 0; i < n; i++) {

freq = freqs[i];

if (freq == 0) {

continue; /* Ignore zero-frequency symbols. */

}

/* Shift frequencies down towards 1. */

freq >>= freq_shift;

if (freq == 0) {

freq = 1;

}

/* High 16 bits: Symbol frequency.

Low 16 bits: Symbol link element index. */

h++;

nodes[h] = ((uint32_t)freq << 16) | (uint32_t)(n + h);

}

minheap_heapify(nodes, h);

/* Special case for fewer than two non-zero symbols. */

if (h < 2) {

for (i = 0; i < n; i++) {

lengths[i] = (freqs[i] == 0) ? 0 : 1;

}

return;

}

/* Build the Huffman tree. */

while (h > 1) {

/* Remove the lowest frequency node p from the heap. */

p = nodes[1];

nodes[1] = nodes[h--];

minheap_down(nodes, h, 1);

/* Get q, the next lowest frequency node. */

q = nodes[1];

/* Replace q with a new symbol with the combined frequencies of

p and q, and with the no longer used h+1'th node as the

link element. */

nodes[1] = ((p & 0xffff0000) + (q & 0xffff0000))

| (uint32_t)(h + 1);

/* Set the links of p and q to point to the link element of

the new node. */

nodes[p & 0xffff] = nodes[q & 0xffff] = (uint32_t)(h + 1);

/* Move the new symbol down to restore heap property. */

minheap_down(nodes, h, 1);

}

/* Compute the codeword length for each symbol. */

h = 0;

for (i = 0; i < n; i++) {

if (freqs[i] == 0) {

lengths[i] = 0;

continue;

}

h++;

/* Link element for the i-th symbol. */

p = nodes[n + h];

/* Follow the links until we hit the root (link index 2). */

l = 1;

while (p != 2) {

l++;

p = nodes[p];

}

if (l > max_len) {

/* Push freqs lower. */

assert(freq_shift != 15 && "freq_shift too high!");

freq_shift++;

goto try_again;

}

assert(l <= UINT8_MAX);

lengths[i] = (uint8_t)l;

}

}

An elegant alternative to the binary heap approach is to store the symbols in two queues. The first queue contains the original symbols, sorted by frequency. When a composite symbol is created, it is added to the second queue. This way, the lowest-frequency symbol will always be found at the front of one of the queues. This was described by Jan van Leeuwen in On the Construction of Huffman Trees (1976).

While Huffman codes are optimal as far as prefix-free codes go, there are more efficient ways to encode data beyond prefix coding, such as Arithmetic coding and Asymmetric numeral systems.

Canonical Huffman Codes

In the earlier example we ended up with the Huffman tree below.

By walking down the tree from the root and using 0 for left branches and 1 for right branches, we ended up with the following code:

| Symbol | codeword |

|---|---|

| A | 0 |

| B | 10 |

| C | 110 |

| D | 111 |

The decision to use 0 for left 1 for right branches seems arbitrary. If we do the reverse we get:

| Symbol | codeword |

|---|---|

| A | 1 |

| B | 01 |

| C | 001 |

| D | 000 |

In fact, we can label the two edges from a node with 0 or 1 arbitrarily (as long as the labels are different) and still end up with an equivalent code:

| Symbol | codeword |

|---|---|

| A | 0 |

| B | 11 |

| C | 100 |

| D | 101 |

This shows that while Huffman's algorithm gives the requisite codeword lengths for a minimum-redundancy prefix-free code, there are many ways of assigning the individual codewords.

Given codeword lengths computed by Huffman's algorithm, a Canonical Huffman code assigns codewords to symbols in a specific way. This is useful because it makes it sufficient to store and transmit the codeword lengths with the compressed data: the decoder can reconstruct the codewords based on the lengths. (One could of course also store and transmit the symbol frequencies and run Huffman's algorithm in the decoder, but that would require more work for the decoder and likely more storage space too.) Another very important property is that the structure of canonical codes facilitates efficient decoding.

The idea is to assign codewords to the symbols sequentially, one codeword length at a time. The initial codeword is 0. The next codeword of some length is the previous one plus 1. The first codeword of length N is constructed by taking the last codeword of length N-1, adding one (to get a new codeword) and shifting left one step (to increase the length).

Viewed in terms of a Huffman tree, codewords are assigned in sequence to the leaves in left-to-right order, one level at a time, shifting left when we move down one level.

In our A, B, C, D example, Huffman's algorithm gave codeword lengths 1,2,3,3. The first codeword is 0. That is also the last codeword of length 1. For length 2, we take the 0, add 1 to get the next code which will be the prefix of the two-bit codes: we shift it left and obtain 10. That is also the last codeword of length 2. To get to length 3, we add one and shift: 110. To get the next one of length 3, we add one: 111.

| Symbol | codeword |

|---|---|

| A | 0 |

| B | 10 |

| C | 110 |

| D | 111 |

The implementation for generating the canonical codes is shown below. Note that the Deflate algorithm expects codewords to be emitted LSB-first, that is, the first bit of a codeword should be stored in the least significant bit. This means we have to reverse the bits, which can be done using a lookup table.

#define MAX_HUFFMAN_BITS 16 /* Implode uses max 16-bit codewords. */

static void compute_canonical_code(uint16_t *codewords, const uint8_t *lengths,

size_t n)

{

size_t i;

uint16_t count[MAX_HUFFMAN_BITS + 1] = {0};

uint16_t code[MAX_HUFFMAN_BITS + 1];

int l;

/* Count the number of codewords of each length. */

for (i = 0; i < n; i++) {

count[lengths[i]]++;

}

count[0] = 0; /* Ignore zero-length codes. */

/* Compute the first codeword for each length. */

code[0] = 0;

for (l = 1; l <= MAX_HUFFMAN_BITS; l++) {

code[l] = (uint16_t)((code[l - 1] + count[l - 1]) << 1);

}

/* Assign a codeword for each symbol. */

for (i = 0; i < n; i++) {

l = lengths[i];

if (l == 0) {

continue;

}

codewords[i] = reverse16(code[l]++, l); /* Make it LSB-first. */

}

}

/* Reverse the n least significant bits of x.

The (16 - n) most significant bits of the result will be zero. */

static inline uint16_t reverse16(uint16_t x, int n)

{

uint16_t lo, hi;

uint16_t reversed;

assert(n > 0);

assert(n <= 16);

lo = x & 0xff;

hi = x >> 8;

reversed = (uint16_t)((reverse8_tbl[lo] << 8) | reverse8_tbl[hi]);

return reversed >> (16 - n);

}

With all the parts now in place, we can write the code to initialize the encoder:

typedef struct huffman_encoder_t huffman_encoder_t;

struct huffman_encoder_t {

uint16_t codewords[MAX_HUFFMAN_SYMBOLS]; /* LSB-first codewords. */

uint8_t lengths[MAX_HUFFMAN_SYMBOLS]; /* Codeword lengths. */

};

/* Initialize a Huffman encoder based on the n symbol frequencies. */

void huffman_encoder_init(huffman_encoder_t *e, const uint16_t *freqs, size_t n,

uint8_t max_codeword_len)

{

assert(n <= MAX_HUFFMAN_SYMBOLS);

assert(max_codeword_len <= MAX_HUFFMAN_BITS);

compute_huffman_lengths(freqs, n, max_codeword_len, e->lengths);

compute_canonical_code(e->codewords, e->lengths, n);

}

We also provide a function for setting up an encoder based on already computed code lengths:

/* Initialize a Huffman encoder based on the n codeword lengths. */

void huffman_encoder_init2(huffman_encoder_t *e, const uint8_t *lengths,

size_t n)

{

size_t i;

for (i = 0; i < n; i++) {

e->lengths[i] = lengths[i];

}

compute_canonical_code(e->codewords, e->lengths, n);

}

Efficient Huffman Decoding

The most basic way of doing Huffman decoding is to walk the Huffman tree from the root, reading one bit of input at a time to decide whether to take the next left or right branch. Once a leaf node is reached, that is the decoded symbol.

The method above is often taught at universities and in textbooks. It is simple and elegant, but processing one bit at a time is relatively slow. A very fast way of decoding is to use a lookup table. For the code above where the max codeword length is three bits, we could use the following table:

| Bits | Symbol | Codeword Length |

|---|---|---|

| 000 | A | 1 |

| 001 | A | 1 |

| 010 | A | 1 |

| 011 | A | 1 |

| 100 | B | 2 |

| 101 | B | 2 |

| 110 | C | 3 |

| 111 | D | 3 |

Although there are only four symbols, the table needs to have eight entries to cover all possible three-bit inputs. Symbols with codewords shorter than three bits have multiple entries in the table. For example, the 10 codeword has been "padded" to 100 and 101 to cover all three-bit inputs starting with 10.

To perform decoding using this method, one would index into the table using the next three bits of input, and immediately find the corresponding symbol and its codeword length. The length is important, because even though we looked at the next three bits, we should only consume as many bits of input as the actual codeword is long.

The lookup table approach is very fast, but there is a downside: the table size doubles with each extra bit of codeword length. This means that building the table becomes exponentially slower, and using it may also become slower if it no longer fits in the CPU's cache.

Because of this, a lookup table is typically only used for codewords up to a certain length, and some other approach is used for longer codewords. As Huffman coding assigns shorter codewords to more frequent symbols, using a lookup table for short codewords is a great optimization for the common case.

The method used by Zlib is to have multiple levels of lookup tables. If a codeword is too long for the first table, the table entry will point to a secondary table, to be indexed with the remaining bits.

However, there is another very elegant method based on the properties of canonical Huffman codes. This is described in On the Implementation of Minimum Redundancy Prefix Codes (Moffat and Turpin 1997) and further explained in Charles Bloom's The Lost Huffman Paper.

Consider the codewords from our canonical code above: 0, 10, 110, 111. We will keep track of the first codeword of each length, and where in the sequence of assigned codewords it is, the "symbol index".

| Codeword Length | First Codeword | First Symbol Index |

|---|---|---|

| 1 | 0 | 1 (A) |

| 2 | 10 | 2 (B) |

| 3 | 110 | 3 (C) |

Because the codewords are assigned sequentially, once we know how many bits of input to consider, the table above lets us find out what symbol index those bits represent. For example, for the 3-bit input 111, we see that this is at offset 1 from the first codeword of that length (110). The first symbol index of that length is 3, and the offset of 1 takes us to symbol index 4. Another table maps the symbol index to the symbol:

sym_idx = d->first_symbol[len] + (bits - d->first_code[len]);

sym = d->syms[sym_idx];

As a small optimization, instead of storing the first symbol index and first codeword separately, we can store the first symbol index minus the first codeword in a table:

sym_idx = d->offset_first_sym_idx[len] + bits;

sym = d->syms[sym_idx];

To determine how many bits of input to consider, we again use the sequential property of the code. In our example code, the valid 1-bit codewords are all strictly less than 1, the 2-bit codewords are strictly less than 11, and the 3-bit codewords are strictly less than 1000 (trivially true for all 3-bit values). In other words, a valid N-bit codeword must be strictly less than the first N-bit codeword plus the number of N-bit codewords. What is even more exciting is that we can left-shift those limits so that they are all 3 bits wide. Let us call them the sentinel bits for each codeword length:

| Codeword Length | Sentinel Bits |

|---|---|

| 1 | 100 |

| 2 | 110 |

| 3 | 1000 |

(The length 3 sentinel has overflowed to 4 bits, but that just means any 3-bit input will do.)

This means we can look at three bits of input and compare against the sentinel bits to figure out how long our codeword is. Once that is done, we shift the input bits as to only consider the right number of them, and then find the symbol index as shown above:

for (len = 1; len <= 3; len++) {

if (bits < d->sentinel_bits[len]) {

bits >>= 3 - len; /* Get the len most significant bits. */

sym_idx = d->offset_first_sym_idx[len] + bits;

}

}

The time complexity of this is linear in the number of codeword bits, but it is space efficient, requires only a load and comparison per step, and since shorter codewords are more frequent it optimizes for the common case.

The full decoder is shown below:

#define HUFFMAN_LOOKUP_TABLE_BITS 8 /* Seems a good trade-off. */

typedef struct huffman_decoder_t huffman_decoder_t;

struct huffman_decoder_t {

/* Lookup table for fast decoding of short codewords. */

struct {

uint16_t sym : 9; /* Wide enough to fit the max symbol nbr. */

uint16_t len : 7; /* 0 means no symbol. */

} table[1U << HUFFMAN_LOOKUP_TABLE_BITS];

/* "Sentinel bits" value for each codeword length. */

uint32_t sentinel_bits[MAX_HUFFMAN_BITS + 1];

/* First symbol index minus first codeword mod 2**16 for each length. */

uint16_t offset_first_sym_idx[MAX_HUFFMAN_BITS + 1];

/* Map from symbol index to symbol. */

uint16_t syms[MAX_HUFFMAN_SYMBOLS];

#ifndef NDEBUG

size_t num_syms;

#endif

};

/* Get the n least significant bits of x. */

static inline uint64_t lsb(uint64_t x, size_t n)

{

assert(n <= 63);

return x & (((uint64_t)1 << n) - 1);

}

/* Use the decoder d to decode a symbol from the LSB-first zero-padded bits.

* Returns the decoded symbol number or -1 if no symbol could be decoded.

* *num_used_bits will be set to the number of bits used to decode the symbol,

* or zero if no symbol could be decoded. */

static inline int huffman_decode(const huffman_decoder_t *d, uint16_t bits,

size_t *num_used_bits)

{

uint64_t lookup_bits;

size_t l;

size_t sym_idx;

/* First try the lookup table. */

lookup_bits = lsb(bits, HUFFMAN_LOOKUP_TABLE_BITS);

assert(lookup_bits < sizeof(d->table) / sizeof(d->table[0]));

if (d->table[lookup_bits].len != 0) {

assert(d->table[lookup_bits].len <= HUFFMAN_LOOKUP_TABLE_BITS);

assert(d->table[lookup_bits].sym < d->num_syms);

*num_used_bits = d->table[lookup_bits].len;

return d->table[lookup_bits].sym;

}

/* Then do canonical decoding with the bits in MSB-first order. */

bits = reverse16(bits, MAX_HUFFMAN_BITS);

for (l = HUFFMAN_LOOKUP_TABLE_BITS + 1; l <= MAX_HUFFMAN_BITS; l++) {

if (bits < d->sentinel_bits[l]) {

bits >>= MAX_HUFFMAN_BITS - l;

sym_idx = (uint16_t)(d->offset_first_sym_idx[l] + bits);

assert(sym_idx < d->num_syms);

*num_used_bits = l;

return d->syms[sym_idx];

}

}

*num_used_bits = 0;

return -1;

}

To set up the decoder, we compute the canonical code similarly to huffman_encoder_init and fill in the various tables:

/* Initialize huffman decoder d for a code defined by the n codeword lengths.

Returns false if the codeword lengths do not correspond to a valid prefix

code. */

bool huffman_decoder_init(huffman_decoder_t *d, const uint8_t *lengths,

size_t n)

{

size_t i;

uint16_t count[MAX_HUFFMAN_BITS + 1] = {0};

uint16_t code[MAX_HUFFMAN_BITS + 1];

uint32_t s;

uint16_t sym_idx[MAX_HUFFMAN_BITS + 1];

int l;

#ifndef NDEBUG

assert(n <= MAX_HUFFMAN_SYMBOLS);

d->num_syms = n;

#endif

/* Zero-initialize the lookup table. */

for (i = 0; i < sizeof(d->table) / sizeof(d->table[0]); i++) {

d->table[i].len = 0;

}

/* Count the number of codewords of each length. */

for (i = 0; i < n; i++) {

assert(lengths[i] <= MAX_HUFFMAN_BITS);

count[lengths[i]]++;

}

count[0] = 0; /* Ignore zero-length codewords. */

/* Compute sentinel_bits and offset_first_sym_idx for each length. */

code[0] = 0;

sym_idx[0] = 0;

for (l = 1; l <= MAX_HUFFMAN_BITS; l++) {

/* First canonical codeword of this length. */

code[l] = (uint16_t)((code[l - 1] + count[l - 1]) << 1);

if (count[l] != 0 && code[l] + count[l] - 1 > (1 << l) - 1) {

/* The last codeword is longer than l bits. */

return false;

}

s = (uint32_t)((code[l] + count[l]) << (MAX_HUFFMAN_BITS - l));

d->sentinel_bits[l] = s;

assert(d->sentinel_bits[l] >= code[l] && "No overflow!");

sym_idx[l] = sym_idx[l - 1] + count[l - 1];

d->offset_first_sym_idx[l] = sym_idx[l] - code[l];

}

/* Build mapping from index to symbol and populate the lookup table. */

for (i = 0; i < n; i++) {

l = lengths[i];

if (l == 0) {

continue;

}

d->syms[sym_idx[l]] = (uint16_t)i;

sym_idx[l]++;

if (l <= HUFFMAN_LOOKUP_TABLE_BITS) {

table_insert(d, i, l, code[l]);

code[l]++;

}

}

return true;

}

static void table_insert(huffman_decoder_t *d, size_t sym, int len,

uint16_t codeword)

{

int pad_len;

uint16_t padding, index;

assert(len <= HUFFMAN_LOOKUP_TABLE_BITS);

codeword = reverse16(codeword, len); /* Make it LSB-first. */

pad_len = HUFFMAN_LOOKUP_TABLE_BITS - len;

/* Pad the pad_len upper bits with all bit combinations. */

for (padding = 0; padding < (1U << pad_len); padding++) {

index = (uint16_t)(codeword | (padding << len));

d->table[index].sym = (uint16_t)sym;

d->table[index].len = (uint16_t)len;

assert(d->table[index].sym == sym && "Fits in bitfield.");

assert(d->table[index].len == len && "Fits in bitfield.");

}

}

Deflate

Deflate, introduced with PKZip 2.04c in 1993, is the default compression method in modern Zip files. It is also the compression method used in gzip, PNG, and many other file formats. It uses LZ77 compression and Huffman coding in a combination which will be described and implemented in this section.

Before Deflate, PKZip used compression methods called Shrink, Reduce, and Implode. Although those methods are rarely seen in use today, they were still in use some time after the introduction of Deflate since they required less memory. Those legacy methods are covered in a follow-up article.

Bitstreams

Deflate stores Huffman codewords in a least-significant-bit-first (LSB-first) bitstream, meaning that the first bit of the stream is stored in the least significant bit of the first byte.

For example, consider this bit stream (read left-to-right): 1-0-0-1-1. When stored LSB-first in a byte, the byte's value becomes 0b00011001 (binary) or 0x19 (hexadecimal). This might seem backwards (in a sense it is), but one advantage is that it makes it easy to get the first N bits from a computer word: just mask off the N lowest bits.

The following routines are from bitstream.h.

/* Input bitstream. */

typedef struct istream_t istream_t;

struct istream_t {

const uint8_t *src; /* Source bytes. */

const uint8_t *end; /* Past-the-end byte of src. */

size_t bitpos; /* Position of the next bit to read. */

size_t bitpos_end; /* Position of past-the-end bit. */

};

/* Initialize an input stream to present the n bytes from src as an LSB-first

* bitstream. */

static inline void istream_init(istream_t *is, const uint8_t *src, size_t n)

{

is->src = src;

is->end = src + n;

is->bitpos = 0;

is->bitpos_end = n * 8;

}

For our Huffman decoder, we want to look at the next bits in the stream (enough bits for the longest possible codeword), and then advance the stream by the number of bits used by the decoded symbol:

#define ISTREAM_MIN_BITS (64 - 7)

/* Get the next bits from the input stream. The number of bits returned is

* between ISTREAM_MIN_BITS and 64, depending on the position in the stream, or

* fewer if the end of stream is reached. The upper bits are zero-padded. */

static inline uint64_t istream_bits(const istream_t *is)

{

const uint8_t *next;

uint64_t bits;

int i;

next = &is->src[is->bitpos / 8];

assert(next <= is->end && "Cannot read past end of stream.");

if (is->end - next >= 8) {

/* Common case: read 8 bytes in one go. */

bits = read64le(next);

} else {

/* Read the available bytes and zero-pad. */

bits = 0;

for (i = 0; i < is->end - next; i++) {

bits |= (uint64_t)next[i] << (i * 8);

}

}

return bits >> (is->bitpos % 8);

}

/* Advance n bits in the bitstream if possible. Returns false if that many bits

* are not available in the stream. */

static inline bool istream_advance(istream_t *is, size_t n) {

assert(is->bitpos <= is->bitpos_end);

if (is->bitpos_end - is->bitpos < n) {

return false;

}

is->bitpos += n;

return true;

}

The intention is that in the common case, istream_bits can execute as a single load instruction and some arithmetic on 64-bit machines, assuming the members of the istream_t struct are available in registers. read64le is implemented in bits.h (modern compilers translate it to a single 64-bit load on little-endian):

/* Read a 64-bit value from p in little-endian byte order. */

static inline uint64_t read64le(const uint8_t *p)

{

/* The one true way, see

* https://commandcenter.blogspot.com/2012/04/byte-order-fallacy.html */

return ((uint64_t)p[0] << 0) |

((uint64_t)p[1] << 8) |

((uint64_t)p[2] << 16) |

((uint64_t)p[3] << 24) |

((uint64_t)p[4] << 32) |

((uint64_t)p[5] << 40) |

((uint64_t)p[6] << 48) |

((uint64_t)p[7] << 56);

}

We also need a function to advance the bitstream to the next byte boundary:

/* Round x up to the next multiple of m, which must be a power of 2. */

static inline size_t round_up(size_t x, size_t m)

{

assert((m & (m - 1)) == 0 && "m must be a power of two");

return (x + m - 1) & (size_t)(-m); /* Hacker's Delight (2nd), 3-1. */

}

/* Align the input stream to the next 8-bit boundary and return a pointer to

* that byte, which may be the past-the-end-of-stream byte. */

static inline const uint8_t *istream_byte_align(istream_t *is)

{

const uint8_t *byte;

assert(is->bitpos <= is->bitpos_end && "Not past end of stream.");

is->bitpos = round_up(is->bitpos, 8);

byte = &is->src[is->bitpos / 8];

assert(byte <= is->end);

return byte;

}

For the output bitstream, we write bits using a read-modify-write sequence. In the fast case, a bit write can be done by a 64-bit read, some bit operations, and a 64-bit write.

/* Output bitstream. */

typedef struct ostream_t ostream_t;

struct ostream_t {

uint8_t *dst;

uint8_t *end;

size_t bitpos;

size_t bitpos_end;

};

/* Initialize an output stream to write LSB-first bits into dst[0..n-1]. */

static inline void ostream_init(ostream_t *os, uint8_t *dst, size_t n)

{

os->dst = dst;

os->end = dst + n;

os->bitpos = 0;

os->bitpos_end = n * 8;

}

/* Get the current bit position in the stream. */

static inline size_t ostream_bit_pos(const ostream_t *os)

{

return os->bitpos;

}

/* Return the number of bytes written to the output buffer. */

static inline size_t ostream_bytes_written(ostream_t *os)

{

return round_up(os->bitpos, 8) / 8;

}

/* Write n bits to the output stream. Returns false if there is not enough room

* at the destination. */

static inline bool ostream_write(ostream_t *os, uint64_t bits, size_t n)

{

uint8_t *p;

uint64_t x;

size_t shift, i;

assert(n <= 57);

assert(bits <= ((uint64_t)1 << n) - 1 && "Must fit in n bits.");

if (os->bitpos_end - os->bitpos < n) {

/* Not enough room. */

return false;

}

p = &os->dst[os->bitpos / 8];

shift = os->bitpos % 8;

if (os->end - p >= 8) {

/* Common case: read and write 8 bytes in one go. */

x = read64le(p);

x = lsb(x, shift);

x |= bits << shift;

write64le(p, x);

} else {

/* Slow case: read/write as many bytes as are available. */

x = 0;

for (i = 0; i < (size_t)(os->end - p); i++) {

x |= (uint64_t)p[i] << (i * 8);

}

x = lsb(x, shift);

x |= bits << shift;

for (i = 0; i < (size_t)(os->end - p); i++) {

p[i] = (uint8_t)(x >> (i * 8));

}

}

os->bitpos += n;

return true;

}

/* Write a 64-bit value x to dst in little-endian byte order. */

static inline void write64le(uint8_t *dst, uint64_t x)

{

dst[0] = (uint8_t)(x >> 0);

dst[1] = (uint8_t)(x >> 8);

dst[2] = (uint8_t)(x >> 16);

dst[3] = (uint8_t)(x >> 24);

dst[4] = (uint8_t)(x >> 32);

dst[5] = (uint8_t)(x >> 40);

dst[6] = (uint8_t)(x >> 48);

dst[7] = (uint8_t)(x >> 56);

}

We also want an efficient way of writing bytes to the stream. One could of course perform repeated 8-bit writes, but using memcpy is much faster:

/* Align the bitstream to the next byte boundary, then write the n bytes from

src to it. Returns false if there is not enough room in the stream. */

static inline bool ostream_write_bytes_aligned(ostream_t *os,

const uint8_t *src,

size_t n)

{

if (os->bitpos_end - round_up(os->bitpos, 8) < n * 8) {

return false;

}

os->bitpos = round_up(os->bitpos, 8);

memcpy(&os->dst[os->bitpos / 8], src, n);

os->bitpos += n * 8;

return true;

}

Decompression (Inflation)

Since the compression algorithm is called Deflate—to let the air out of something—the decompression process is sometimes referred to as Inflation. Studying this process first will give us an understanding of how the format works. The code is available in the first part of deflate.h and deflate.c, bits.h, tables.h, and tables.c (generated by generate_tables.c).

Deflate-compressed data is stored as a series of blocks. Each block starts with a 3-bit header where the first (least significant) bit is set if this is the final block of the series, and the other two bits indicate the block type.

There are three block types: uncompressed (0), compressed with fixed Huffman codes (1) and compressed with "dynamic" Huffman codes (2).

The following code drives the decompression, relying on helper functions for the different block types which will be implemented further below.

typedef enum {

HWINF_OK, /* Inflation was successful. */

HWINF_FULL, /* Not enough room in the output buffer. */

HWINF_ERR /* Error in the input data. */

} inf_stat_t;

/* Decompress (inflate) the Deflate stream in src. The number of input bytes

used, at most src_len, is stored in *src_used on success. Output is written

to dst. The number of bytes written, at most dst_cap, is stored in *dst_used

on success. src[0..src_len-1] and dst[0..dst_cap-1] must not overlap. */

inf_stat_t hwinflate(const uint8_t *src, size_t src_len, size_t *src_used,

uint8_t *dst, size_t dst_cap, size_t *dst_used)

{

istream_t is;

size_t dst_pos;

uint64_t bits;

bool bfinal;

inf_stat_t s;

istream_init(&is, src, src_len);

dst_pos = 0;

do {

/* Read the 3-bit block header. */

bits = istream_bits(&is);

if (!istream_advance(&is, 3)) {

return HWINF_ERR;

}

bfinal = bits & 1;

bits >>= 1;

switch (lsb(bits, 2)) {

case 0: /* 00: No compression. */

s = inf_noncomp_block(&is, dst, dst_cap, &dst_pos);

break;

case 1: /* 01: Compressed with fixed Huffman codes. */

s = inf_fixed_block(&is, dst, dst_cap, &dst_pos);

break;

case 2: /* 10: Compressed with "dynamic" Huffman codes. */

s = inf_dyn_block(&is, dst, dst_cap, &dst_pos);

break;

default: /* Invalid block type. */

return HWINF_ERR;

}

if (s != HWINF_OK) {

return s;

}

} while (!bfinal);

*src_used = (size_t)(istream_byte_align(&is) - src);

assert(dst_pos <= dst_cap);

*dst_used = dst_pos;

return HWINF_OK;

}

Non-Compressed Deflate Blocks

The simplest block type is the non-compressed or "stored" block. It begins at the next 8-bit boundary of the bitstream, with a 16-bit word (len) indicating the length of the block, followed by another 16-bit word (nlen) which is the ones' complement (all bits inverted) of len. The idea is presumably that nlen acts as a simple checksum of len: if the file is corrupted, it is likely that the values are no longer each others' complements, and the program can detect the error.

After len and nlen follows the non-compressed data. Because the block length is a 16-bit value, it is limited to 65,535 bytes.

static inf_stat_t inf_noncomp_block(istream_t *is, uint8_t *dst,

size_t dst_cap, size_t *dst_pos)

{

const uint8_t *p;

uint16_t len, nlen;

p = istream_byte_align(is);

/* Read len and nlen (2 x 16 bits). */

if (!istream_advance(is, 32)) {

return HWINF_ERR; /* Not enough input. */

}

len = read16le(p);

nlen = read16le(p + 2);

p += 4;

if (nlen != (uint16_t)~len) {

return HWINF_ERR;

}

if (!istream_advance(is, len * 8)) {

return HWINF_ERR; /* Not enough input. */

}

if (dst_cap - *dst_pos < len) {

return HWINF_FULL; /* Not enough room to output. */

}

memcpy(&dst[*dst_pos], p, len);

*dst_pos += len;

return HWINF_OK;

}

Fixed Huffman Code Deflate Blocks

Compressed Deflate blocks use Huffman codes to represent a sequence of LZ77 literals and back references terminated by an end-of-block marker. One Huffman code, the litlen code, is used for literals, back reference lengths, and the end-of-block marker. A second code, the dist code, is used for back reference distances.

The litlen code encodes values between 0 and 285. Values 0 through 255 represent literal bytes, 256 is the end-of-block marker, and values 257 through 285 represent back reference lengths.

Back references are between 3 and 258 bytes long. The litlen value determines a base length to which zero or more extra bits from the stream are added to get the full length according to the table below. For example, a litlen value of 269 indicates a base length of 19 and two extra bits. Adding the next two bits from the stream yields a final length between 19 and 22.

| Litlen | Extra Bits | Length(s) |

|---|---|---|

| 257 | 0 | 3 |

| 258 | 0 | 4 |

| 259 | 0 | 5 |

| 260 | 0 | 6 |

| 261 | 0 | 7 |

| 262 | 0 | 8 |

| 263 | 0 | 9 |

| 264 | 0 | 10 |

| 265 | 1 | 11–12 |

| 266 | 1 | 13–14 |

| 267 | 1 | 15–16 |

| 268 | 1 | 17–18 |

| 269 | 2 | 19–22 |

| 270 | 2 | 23–26 |

| 271 | 2 | 27–30 |

| 272 | 2 | 31–34 |

| 273 | 3 | 35–42 |

| 274 | 3 | 43–50 |

| 275 | 3 | 51–58 |

| 276 | 3 | 59–66 |

| 277 | 4 | 67–82 |

| 278 | 4 | 83–98 |

| 279 | 4 | 99–114 |

| 280 | 4 | 115–130 |

| 281 | 5 | 131–162 |

| 282 | 5 | 163–194 |

| 283 | 5 | 195–226 |

| 284 | 5 | 227–257 |

| 285 | 0 | 258 |

(Note that litlen value 284 plus five extra bits could actually represents lengths 227–258, but the specification indicates that 258, the maximum back reference length, should be represented using a separate litlen value. This is presumably to allow for a shorter encoding in cases where the maximum length is common.)

The decompressor uses a table that maps from litlen value (minus 257) to base length and extra bits:

/* Table of litlen symbol values minus 257 with corresponding base length

and number of extra bits. */

struct litlen_tbl_t {

uint16_t base_len : 9;

uint16_t ebits : 7;

};

const struct litlen_tbl_t litlen_tbl[29] = {

/* 257 */ { 3, 0 },

/* 258 */ { 4, 0 },

...

/* 284 */ { 227, 5 },

/* 285 */ { 258, 0 }

};

The fixed litlen Huffman code is a canonical code using the following codeword lengths (286–287 are not valid litlen values, but they participate in the code construction):

| Litlen values | Codeword length |

|---|---|

| 0–143 | 8 |

| 144–255 | 9 |

| 256–279 | 7 |

| 280–287 | 8 |

The decompressor keeps those lengths in a table suitable for passing to huffman_decoder_init:

const uint8_t fixed_litlen_lengths[288] = {

/* 0 */ 8,

/* 1 */ 8,

...

/* 287 */ 8,

};

Back reference distances, ranging from 1 to 32,768, are encoded using a scheme similar to the one for lengths. The dist Huffman code encodes values between 0 and 29, each corresponding to a base length to which a number of extra bits are added to get the final distance:

| Dist | Extra Bits | Distance(s) |

|---|---|---|

| 0 | 0 | 1 |

| 1 | 0 | 2 |

| 2 | 0 | 3 |

| 3 | 0 | 4 |

| 4 | 1 | 5–6 |

| 5 | 1 | 7–8 |

| 6 | 2 | 9–12 |

| 7 | 2 | 13–16 |

| 8 | 3 | 17–24 |

| 9 | 3 | 25–32 |

| 10 | 4 | 33–48 |

| 11 | 4 | 49–64 |

| 12 | 5 | 65–96 |

| 13 | 5 | 97–128 |

| 14 | 6 | 129–192 |

| 15 | 6 | 193–256 |

| 16 | 7 | 257–384 |

| 17 | 7 | 385–512 |

| 18 | 8 | 513–768 |

| 19 | 8 | 769–1024 |

| 20 | 9 | 1025–1536 |

| 21 | 9 | 1537–2048 |

| 22 | 10 | 2049–3072 |

| 23 | 10 | 3073–4096 |

| 24 | 11 | 4097–6144 |

| 25 | 11 | 6145–8192 |

| 26 | 12 | 8193–12288 |

| 27 | 12 | 12289–16384 |

| 28 | 13 | 16385–24576 |

| 29 | 13 | 24577–32768 |

The fixed dist code is a canonical Huffman code where all codewords are 5 bits long. Although trivial, the decompressor keeps it in a table so that it can be used with huffman_decoder_init (dist values 30–31 are not valid, but are specified as participating in the Huffman code construction, though they do not have any effect):

const uint8_t fixed_dist_lengths[32] = {

/* 0 */ 5,

/* 1 */ 5,

...

/* 31 */ 5,

};

The code for decompressing, or inflating, a fixed Huffman code deflate block is shown below.

static inf_stat_t inf_fixed_block(istream_t *is, uint8_t *dst,

size_t dst_cap, size_t *dst_pos)

{

huffman_decoder_t litlen_dec, dist_dec;

huffman_decoder_init(&litlen_dec, fixed_litlen_lengths,

sizeof(fixed_litlen_lengths) /

sizeof(fixed_litlen_lengths[0]));

huffman_decoder_init(&dist_dec, fixed_dist_lengths,

sizeof(fixed_dist_lengths) /

sizeof(fixed_dist_lengths[0]));

return inf_block(is, dst, dst_cap, dst_pos, &litlen_dec, &dist_dec);

}

#define LITLEN_EOB 256

#define LITLEN_MAX 285

#define LITLEN_TBL_OFFSET 257

#define MIN_LEN 3

#define MAX_LEN 258

#define DISTSYM_MAX 29

#define MIN_DISTANCE 1

#define MAX_DISTANCE 32768

static inf_stat_t inf_block(istream_t *is, uint8_t *dst, size_t dst_cap,

size_t *dst_pos,

const huffman_decoder_t *litlen_dec,

const huffman_decoder_t *dist_dec)

{

uint64_t bits;

size_t used, used_tot, dist, len;

int litlen, distsym;

uint16_t ebits;

while (true) {

/* Read a litlen symbol. */

bits = istream_bits(is);

litlen = huffman_decode(litlen_dec, (uint16_t)bits, &used);

bits >>= used;

used_tot = used;

if (litlen < 0 || litlen > LITLEN_MAX) {

/* Failed to decode, or invalid symbol. */

return HWINF_ERR;

} else if (litlen <= UINT8_MAX) {

/* Literal. */

if (!istream_advance(is, used_tot)) {

return HWINF_ERR;

}

if (*dst_pos == dst_cap) {

return HWINF_FULL;

}

lz77_output_lit(dst, (*dst_pos)++, (uint8_t)litlen);

continue;

} else if (litlen == LITLEN_EOB) {

/* End of block. */

if (!istream_advance(is, used_tot)) {

return HWINF_ERR;

}

return HWINF_OK;

}

/* It is a back reference. Figure out the length. */

assert(litlen >= LITLEN_TBL_OFFSET && litlen <= LITLEN_MAX);

len = litlen_tbl[litlen - LITLEN_TBL_OFFSET].base_len;

ebits = litlen_tbl[litlen - LITLEN_TBL_OFFSET].ebits;

if (ebits != 0) {

len += lsb(bits, ebits);

bits >>= ebits;

used_tot += ebits;

}

assert(len >= MIN_LEN && len <= MAX_LEN);

/* Get the distance. */

distsym = huffman_decode(dist_dec, (uint16_t)bits, &used);

bits >>= used;

used_tot += used;

if (distsym < 0 || distsym > DISTSYM_MAX) {

/* Failed to decode, or invalid symbol. */

return HWINF_ERR;

}

dist = dist_tbl[distsym].base_dist;

ebits = dist_tbl[distsym].ebits;

if (ebits != 0) {

dist += lsb(bits, ebits);

bits >>= ebits;

used_tot += ebits;

}

assert(dist >= MIN_DISTANCE && dist <= MAX_DISTANCE);

assert(used_tot <= ISTREAM_MIN_BITS);

if (!istream_advance(is, used_tot)) {

return HWINF_ERR;

}

/* Bounds check and output the backref. */

if (dist > *dst_pos) {

return HWINF_ERR;

}

if (round_up(len, 8) <= dst_cap - *dst_pos) {

lz77_output_backref64(dst, *dst_pos, dist, len);

} else if (len <= dst_cap - *dst_pos) {

lz77_output_backref(dst, *dst_pos, dist, len);

} else {

return HWINF_FULL;

}

(*dst_pos) += len;

}

}

Note that as an optimization when there is enough room in the output buffer, we output back references using the routine below which copies 64 bits at a time. It is "sloppy" in the sense that it often copies a few extra bytes (to the next multiple of 8), but it is much faster than lz77_output_backref since it needs fewer loop iterations and memory accesses. In fact, short back references will now all be handled by a single iteration, which is great for branch prediction.

/* Output the (dist,len) backref at dst_pos in dst using 64-bit wide writes.

There must be enough room for len bytes rounded to the next multiple of 8. */

static inline void lz77_output_backref64(uint8_t *dst, size_t dst_pos,

size_t dist, size_t len)

{

size_t i;

uint64_t tmp;

assert(len > 0);

assert(dist <= dst_pos && "cannot reference before beginning of dst");

if (len > dist) {

/* Self-overlapping backref; fall back to byte-by-byte copy. */

lz77_output_backref(dst, dst_pos, dist, len);

return;

}

i = 0;

do {

memcpy(&tmp, &dst[dst_pos - dist + i], 8);

memcpy(&dst[dst_pos + i], &tmp, 8);

i += 8;

} while (i < len);

}

Dynamic Huffman Code Deflate Blocks